Program

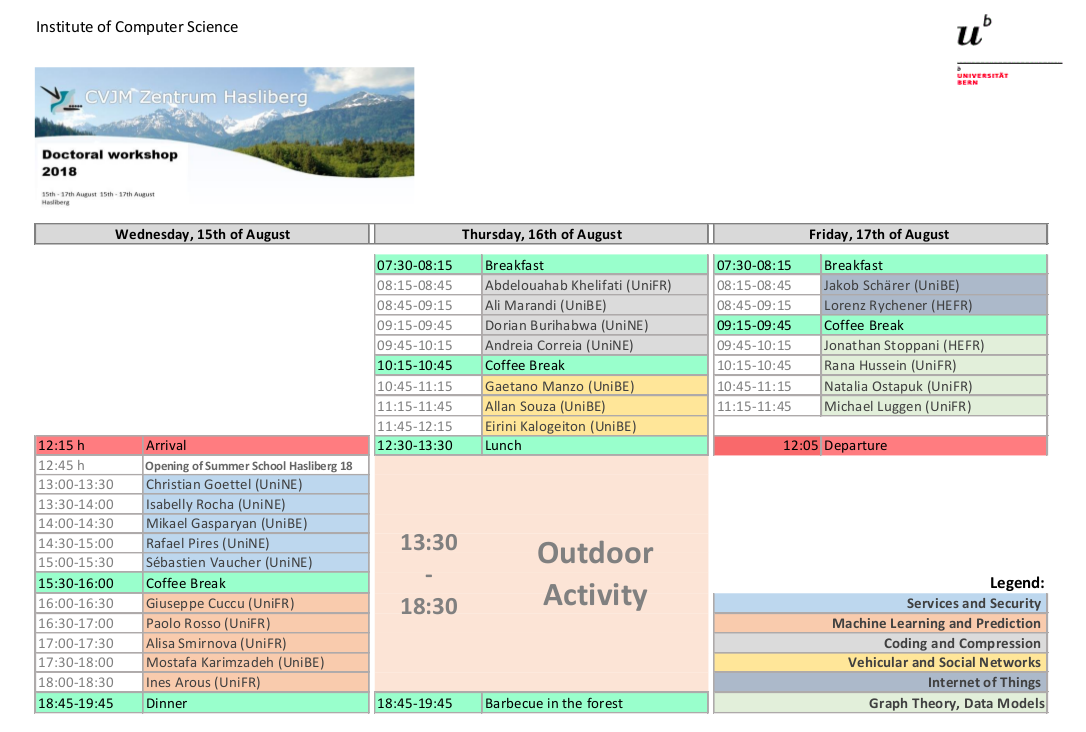

Day 1 - 15.08.2018:

| 12:45 | Opening of the Summer School Hasliberg 2018. |

| 13:00-15:30 | Services and Security (5 talks). |

|

13:00-13:30 |

Speaker: Christian Goettel (UniNE). |

| 13:30-14:00 | Speaker: Isabelly Rocha (UniNE). Title: Towards Energy Efficiency for Hardware-Assisted Secure Cloud Computing. Abstract: The cloud computing field has sustained its grown in the past years, leading to an increase on the number and size of data centers but also on the concern regarding security. One way to solve this problem is to use hardware-assisted memory protection mechanisms such as Intel SGX and AMD SEV, which isolates the memory of security-critical applications from the untrusted OS. However, such mechanisms introduce energy and efficiency trade-offs to the applications which are also great concerns in the area. Therefore, we would like to have those trade-offs well defined for different types of hardwares and, based on this propose an scheduling strategy that will find the optimal environment setup for a given task depending on its requirements. |

| 14:00-14:30 | Mikael Gasparyan (UniBE). Title: Fault-Tolerant Session Support for Service-Centric Networking. Abstract: The current Internet architecture is based on a host-to-host communication model, in which a requester has to possess an address of the provider to access content. In the current Internet model, information is exchanged through the TCP/IP protocol suite, which allows end-to-end communication in a multi-hop environment. Information-Centric Networking (ICN) is a next-generation Internet architecture. ICN proposes a fundamental paradigm shift in today’s Internet architecture. It aims to change the current host-to-host communication model to a content-oriented one, in which network organization is centered around content. SCN (Service-Centric Networking) is an extension of the Information-Centric Networking (ICN) architectural design. SCN enhances the ICN paradigm with service support. We have developed the first session support mechanism for SCN and extended it with link failure recovery capabilities. The first link failure recovery mechanism is based on Bloom filter propagation of session identifiers, the second design is based on service provider identifier propagation, and the third design uses a piggybacking technique for service provider identifier propagation. |

| 14:30-15:00 | Speaker: Rafael Pires (UniNE). Title: CYCLOSA - a decentralizing private web search through SGX-based browser extensions. Abstract: By regularly querying Web search engines, users (unconsciously) disclose large amounts of their personal data as part of their search queries, among which some might reveal sensitive information (e.g. health issues, sexual, political or religious preferences). Several solutions exist to allow users querying search engines while improving privacy protection. However, these solutions suffer from a number of limitations: some are subject to user re- identification attacks, while others lack scalability or are unable to provide accurate results. This paper presents CYCLOSA, a secure, scalable and accurate private Web search solution. CYCLOSA improves security by relying on trusted execution environments (TEEs) as provided by Intel SGX. Further, CYCLOSA proposes a novel adaptive privacy protection solution that reduces the risk of user re-identification. CYCLOSA sends fake queries to the search engine and dynamically adapts their count according to the sensitivity of the user query. In addition, CYCLOSA meets scalability as it is fully decentralized, spreading the load for distributing fake queries among other nodes. Finally, CYCLOSA achieves accuracy of Web search as it handles the real query and the fake queries separately, in contrast to other existing solutions that mix fake and real query results. |

| 15:00-15.30 |

Speaker: Sébastien Vaucher (UniNE). We describe EndBox, a system that securely executes middlebox functions on client machines at the network edge. Its design combines a virtual private network (VPN) with middlebox functions that are hardware-protected by a trusted execution environment (TEE), as offered by Intel's Software Guard Extensions (SGX). By maintaining VPN connection endpoints inside SGX enclaves, EndBox ensures that all client traffic, including encrypted communication, is processed by the middlebox. Despite its decentralised model, EndBox's middlebox functions remain maintainable: they are centrally controlled and can be updated efficiently. We demonstrate EndBox with two scenarios involving (i) a large company; and (ii) an Internet service provider that both need to protect their network and connected clients. We evaluate EndBox by comparing it to centralised deployments of common middlebox functions, such as load balancing, intrusion detection, firewalling, and DDoS prevention. We show that EndBox achieves up to 3.8x higher throughput and scales linearly with the number of clients. |

| 15:30-16:00 | (coffee break) |

| 16:00-18:30 | Machine Learning and Prediction (5 talks). |

| 16:00-16:30 | Speaker: Giuseppe Cuccu (UniFR). Title: Understanding Reinforcement Learning, Neural Networks and Evolutionary Computation. Abstract: This talk introduces the audience to the three Machine Learning keywords in the title and their scope. (i) Reinforcement Learning is a learning paradigm applicable where no labeled data is available, by optimizing a generic quality score defined over candidate solutions. (ii) Neural Networks are presented (and demystified) as parametrized generic function approximators, capable of reconstructing any function to arbitrary precision. (iii) Evolutionary Computation is a family of powerful black-box optimization algorithms, which search in high-dimensional parameter spaces without knowledge of the parameters' meaning. Finally, the framework of RL Neuroevolution is proposed as a synergy of all three, addressing complex problems in a robust and scalable manner. |

| 16:30-17:00 | Speaker: Paolo Rosso (UniFR). Title: Hybrid Query Expansion engine based on Machine Learning and Crowdsourcing. Abstract: Several vertical job search engines are available to support the online job search. However, using these search engines could be challenged for both job seekers and recruiters. The issue is that there exists no standard to write job postings. Often, companies use terms and expressions to describe job postings that cannot be obvious to job seekers, by making difficult the mapping of the user query intent to the specialized and evolving vocabulary of the job postings. In this PhD work, we propose a new Query Expansion engine to create domain-specific word similarity models using machine learning techniques. The idea is to use embeddings to find automatically related terms to a particular query. Specifically, we learn text embedding models that are trained to reconstruct linguistic contexts of words appearing in the job postings and their titles. Furthermore, we generate taxonomy embedding models to learn hierarchical structured information such as, knowledge and skills. The two models are jointly learnt to preserve the relations between concepts in the taxonomy and the concurrences of words in the postings. Moreover, we use crowdsourcing to continuously monitor, test and improve these models. More precisely, the crowdsourcing component supports the machine learning models by asking the crowds to provide training samples, validate the engine output, or provide manual query expansion in real-time. |

| 17:00-17:30 | Speaker: Alisa Smirnova (UniFR). Title: Relation Extraction Using Distant Supervision. Abstract: Relation extraction is an essential part of Information Retrieval and Natural Language Understanding. The idea is to extract structured data from text in order to make it machine-readable or processable. The significant limitation of applying machine learning algorithms in this case is lack of annotated data. Distant supervision paradigm was proposed to overcome this limitation by automatically obtaining relation labels from a knowledge base. However, this automatically labeled data contains noise (false positives and false negatives). A number of approaches were proposed for noise reduction and it still remains an active research area. That is also area of my research. I start my presentation with explaining the concept of distant supervision and describing state-of-the-art approaches for relation extraction. I present our approach for relation extraction that is based on convolutional neural networks. In the end of my presentation I highlight the next prominent directions that I’ll be working on. |

| 17:30-18:00 | Speaker: Mostafa Karimzadeh (UniBE). Title: Pedestrians Complex Behavior Understanding and Prediction Using Mobility Markov Chain Model. Abstract: The growing ubiquity of smart-phones equipped with built-in sensors and global positioning system (GPS) has resulted in the collection of large volumes of mobility data without the need of any additional devices. The large size of heterogeneous mobility data gives rise to rapid development of location-based services (LBSs). The predictability of mobile users’ behavior is essential to enhance LBSs. To predict human mobility, many techniques have been proposed. However, existing techniques require good data quality to guarantee optimal performance. In this paper, we proposed a hybrid Markov chain to predict mobile users’ future locations. Our model constantly adapts to available user trace quality to select either the first order or the second order Markov chain. Compared to existing solutions, our model is adaptive to discrete gaps in data trace. In addition, we implemented a proper mechanism to predict congestion in city areas. To help us understanding complex user behaviors, we have also proposed a technique benefiting both temporal and spatial parameters to extract Zone of Interests (ZOIs). To evaluate the algorithms performance, we use a real-life dataset from the Nokia Mobile Data Challenge (MDC) collected around Lake Geneva region from 180 users. Experiment results show that our approach could achieve a satisfying location prediction accuracy as well as area congestion prediction for most of the users. |

| 18:00-18:30 | Speaker: Ines Arous (UniFR). Title: Trend Prediction in Heterogeneous Data Streams. Abstract: Trend prediction is a key task in many real-world application domains and a well-studied problem for data at rest. The emergence of data streams in many applications brings new challenges with the data constantly updated and being heterogeneous, i.e., of different types. Take the example of the fashion field, where retailers have to take into account both the sales data, which is numerical, and user’s comments/feedback from social networks, which is textual, to perform the prediction of next trends. Classical prediction techniques fall short in producing accurate results in such context. In this thesis, we plan to investigate the problem of trend prediction in heterogeneous data streams using a two-fold paradigm. Firstly, we will investigate the use of correlation to perform online trend prediction. We propose to adopt a time series-based solution able to incrementally compute the prediction as the data gets updated. A prior online classification is required to improve the result of the prediction. Secondly, we will investigate the trend prediction problem using a social network-based solution where we take advantage of both numerical data, e.g., number of retweets or number of likes, and textual data, e.g., tweets or reviews. We will design a solution to identify the social influencers who have a great impact in their followers. For example, fashion influencers encourage their followers to buy a new product or try a new brand and they can even initiate a new fashion trend. In order to efficiently find these influencers, we plan to resolve to a human in the loop approach that leverages crowd networks through peer routing. The aim is to involve individuals and harness their social relationships for influencers finding. |

| 18:45-19:45 | (dinner) |

Day 2 - 16.08.2018:

| 7:30-8:15 | (breakfast) |

| 8:15-10:15 | Coding and Compression (4 talks). |

| 8:15-8:45 |

Speaker: Abdelouahab Khelifati (UniFR). |

|

8:45-9:15 |

Speaker: Ali Marandi (UniBE). Title: Bloom filter-based content discovery in Information-Centric Networks. Abstract: Information-Centric Networking (ICN) aims at decoupling content objects from locations, in contrast to IP networking that binds content objects to specific servers. Thus, routing protocols that have been proposed for IP networks do not work for ICNs. Hence, designing routing protocols for ICNs has recently called much attention. We focus on Named Data Networking (NDN), which is the most prominent ICN-based architecture and propose Bloom filter-based routing protocols for NDN. Our Bloom filter-based routing protocols have the following merits. 1) fully content oriented and distributed, 2) does not need any IP-based fall-back mechanism, 3) small routing overhead and less complex name matching, 4) resilient to link failures. We also address scalability issues with large content universes. In this talk, we discuss our Bloom filter-based routing protocols in detail. |

| 9:15-9:45 | Speaker: Dorian Burihabwa (UniNE). Title: RECAST - Random Entanglement for Censorship-resistant Archival STorage. Abstract: Users entrust an increasing amount of data to online cloud systems for archival purposes. Existing storage systems designed to preserve user data unaltered for decades do not, however, provide strong security guarantees - at least at a reasonable cost. This paper introduces RECAST, an anti-censorship data archival system based on random data entanglement. Documents are mixed together using an entanglement scheme that exploits erasure codes for secure and tamper-proof long-term archival. Data is intertwined in such a way that it becomes virtually impossible to delete a specific document that has been stored long enough in the system, without also erasing a substantial fraction of the whole archive, which requires a very powerful adversary and openly exposes the attack. We validate RECAST entanglement approach via simulations and we present and evaluate a full-fledged prototype deployed in a local cluster. In one of our settings, we show that RECAST, configured with the same storage overhead as triple replication, can withstand 10% of storage node failures without any data loss. Furthermore, we estimate that the effort required from a powerful censor to delete a specific target document is two orders of magnitude larger than for triple replication. |

| 9:45-10:15 |

Speaker: Andreia Correia (UniNE). |

| 10:15-10:45 | (coffee break) |

| 10:45-12:15 | Vehicular and Social Networks (3 talks). |

| 10:45-11:15 |

Speaker: Gaetano Manzo (UniBE). |

| 11:15-11:45 | Speaker: Allan Souza (UniBE). Title: Context-aware Vehicular Traffic Re-routing based on Pareto-Optimality - A Safe-Fast Use Case. Abstract: Vehicular traffic re-routing is the key to provide better traffic mobility. However, considering just traffic-related information to recommend better routes for each vehicle is far from achieving the desired requirements of a good Traffic Management System, which intends to improve not only mobility, but also driving experience and safety of drivers and passengers. Context-aware and multi-objective re-routing approaches will play an important role in traffic management. Yet, most approaches are deterministic and can not support the strict requirements of traffic management applications, since many vehicles potentially will take the same route, consequently degrading overall traffic efficiency. In this way, this work introduces an efficient algorithm based on Pareto-optimality for dealing with such problem. In addition, we focus on the improvement of traffic mobility and public safety during the route recommendation. Thus, methods for building pieces of knowledge about ongoing traffic conditions and risky areas based on city-wide criminal activities are presented. Simulation results have shown that our proposal provides a better trade-off between mobility and safety than state-of-the-art approaches and also avoids the problem of potentially creating different congestion spots |

| 11:45-12:15 |

Speaker: Eirini Kalogeiton (UniBE). |

| 12:30-13:30 | (lunch) |

| 13:30-18:30 | (outdoor activity) |

| 18:45-19:45 | (barbecue in the forest) |

Day 3 - 17.08.2018:

| 7:30-8:15 | (breakfast) |

| 8:15-9:15 | Internet of Things (2 talks). |

| 8:15-8:45 |

Speaker: Jakob Schärer (UniBE). |

| 8:45-9:15 | Speaker: Lorenz Rychener (HEFR). Title: Machine Learning for Anomaly Detection in Time-Series Produced by Industrial Processes. Abstract: An anomaly in data is a measurement that deviated from the standard, the normal distribution or the expected behaviour and the detection of such an event has application cases in many fields. In the wake of Industry 4.0 and the Internet of Things (IoT), which both are pushing for the digitalization of many industrial processes, the demand for anomaly or fault detection based on time series is on the rise. A hand crafted rule-based systems is a cumbersome task to implement, that is not suited to detect unknown and/or rare anomalous events. We would like to discuss the different challenges that industrial anomaly detection is facing and show why Machine Learning (ML) has the potential to offer solutions to these challenges. |

| 9:15-9:45 | (coffee break) |

| 9:45-11:45 | Graph Theory, Data Models (4 talks). |

| 9:45-10:15 |

Speaker: Jonathan Stoppani (HEFR). An alternative technique is to use graphs to represent a reference encompassing multiple variants and alternative loci in order to provide higher fidelity results. The challenges with such an approach are mainly due to the volume of the data to represent, store and process, as well as to the more complex algorithms needed to work with graphical data structures. The goal of my PhD thesis is to enhance an existing toolchain implementing static graphical references with support for dynamic data structures and concurrent querying as provided by modern (R)DBMS. |

| 10:15-10:45 | Speaker: Rana Hussein (UniFR). Title: Heterogeneous Graph Embeddings. Abstract: The graph embedding paradigm projects nodes of a graph into a vector space, which can facilitate various downstream graph analysis tasks such as node classification and clustering. To efficiently learn node embeddings, graph embedding techniques usually preserve the proximity between node pairs sampled from the graph using random walks. Heterogeneous graphs embedding techniques typically rely on meta-paths (i.e., fixed sequences of node types) for generating node embeddings. However, using these meta-paths either requires prior knowledge from domain experts for optimal meta-path selection, or requires extended computations to combine several meta-paths. To overcome this issue, we propose a heterogeneous graph embedding technique using random walks which probabilistically balances traversing homogeneous and heterogeneous edges, as well as the node distribution over different domains. These random walks are fed into a SkipGram model for generating the node embeddings. We evaluate our method on three heterogeneous graph datasets, using two graph analysis tasks. |

| 10:45-11:15 | Speaker: Natalia Ostapuk (UniFR). Title: Deep Active Learning for Link Prediction in Knowledge Graphs. Abstract: Neural networks has recently proven to be effective in link prediction for knowledge graph construction. Existing research has mainly focused on designing 1) deep neural network models that are expressive, e.g., NTN and ConvE, which are however not scalable to large-scale training data; or 2) shall models that are scalable, e.g., TransE and DistMult, however limited in capturing expressive features. In this work, we demonstrate that the amount of training data can be drastically reduced when deep neural networks are combined with active learning. We introduce a novel deep active learning algorithm for link prediction, which inherits from recent advances in Bayesian deep active learning and extends existing work by exploiting links between entities to improve sampling effectiveness. To accelerate model training, we further propose an incremental training method that allows deep neural networks to be incrementally trained in each iteration of the active learning process. Extensive validation on real-world datasets shows that our approach is able to achieve nearly state-of-the-art performance with just a small fraction of the original training data. |

| 11:15-11:45 |

Speaker: Michael Luggen (UniFR). |

| 12:05 | (departure) |